Troubleshooting Linux systems often means digging through thousands of log lines in files such as syslog, dmesg, and auth.log to find the cause of a problem. As a Linux system administrator, you know that log analysis can be time-consuming and error-prone. What if you could use AI to analyze those logs entirely offline, without uploading sensitive data or relying on the cloud?

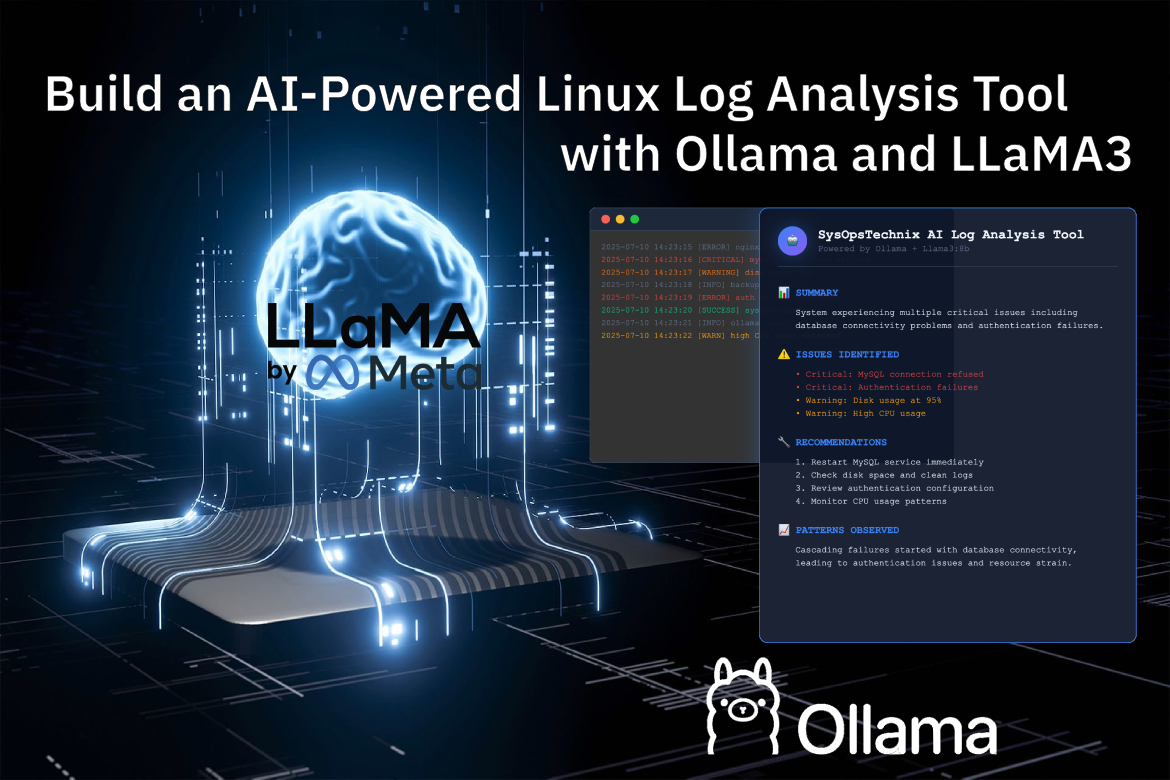

In this guide, you’ll learn how to build an AI log analysis tool that turns cryptic log entries into human-readable insights using Ollama and the Llama 3 8B model (llama3:8b).

Why AI-Powered Linux Log Analysis Matters

Let’s look at some of the traditional log analysis challenges that Linux sysadmins face:

- Volume Overload: Modern systems generate thousands of log entries daily.

- Pattern Recognition: Identifying recurring issues across multiple log files.

- Time Constraints: Manual analysis is very slow and inefficient.

- Context Loss: Missing connections between related log entries.

- Skill Dependency: Requires deep knowledge of various log formats.

Advantages of an AI Solution

The AI-powered log analysis tool addresses these challenges by:

✅ Automated Pattern Recognition: Identifies anomalies and recurring issues instantly.

✅ Natural Language Output: Converts technical logs into human-readable summaries.

✅ Severity Assessment: Prioritizes issues based on criticality.

✅ Contextual Analysis: Understands relationships between log entries.

✅ Time Efficiency: Reduces analysis time from hours to minutes.

What You Need to Get Started: System Prerequisites and Requirements

What is Ollama?

Ollama is a lightweight local runtime for large language models (LLMs). It lets you run models such as Llama 3, Mistral, or Code Llama directly on your machine, using either the CPU or a GPU.

What is LLaMA3?

Llama 3 is Meta’s openly available large language model (LLM) family, released in 2024. The llama3:8b tag used in this guide is the 8-billion-parameter variant. It works well for technical tasks, especially when deployed locally, including:

- Log analysis

- Code completion

- Infrastructure troubleshooting

Why LLaMA3 for Linux log analysis?

- LLaMA 3 (especially the 8B version) offers high-quality language understanding with a relatively small memory footprint, making it easier to run on local hardware (like desktops or edge servers).

- LLaMA 3 can understand longer and structured log entries, detect patterns, and relate log entries across time.

- You can fine-tune or prompt LLaMA 3 with specific examples of syslog formats (e.g., from rsyslog, systemd-journald, auth.log, dmesg, etc.).

- Because the model runs locally through Ollama, on your workstation or your own server, you don’t need to send sensitive logs to the cloud.

- Easy to deploy with Ollama, which simplifies local inference.

Hardware Considerations

For optimal performance with Llama3:8b:

- CPU: Multi-core processor (4+ cores recommended)

- GPU: Optional but recommended for faster inference

- Memory: 16GB recommended for optimal performance

- Storage: 10GB free space for Ollama and models
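The recommendations above can be checked before installing anything. The following is a minimal sketch, assuming a Linux host that exposes /proc/meminfo; the function names and default thresholds are illustrative and simply mirror the list above.

```python
import os
import shutil


def read_mem_total_gib(meminfo_path: str = "/proc/meminfo") -> float:
    """Return total RAM in GiB, parsed from the MemTotal line (reported in kB)."""
    with open(meminfo_path, "r", encoding="utf-8") as f:
        for line in f:
            if line.startswith("MemTotal:"):
                return int(line.split()[1]) / (1024 ** 2)
    raise RuntimeError(f"MemTotal not found in {meminfo_path}")


def check_host(min_cores: int = 4, min_ram_gib: float = 16.0,
               min_free_disk_gib: float = 10.0, disk_path: str = "/",
               meminfo_path: str = "/proc/meminfo") -> dict:
    """Map each resource to a (measured value, meets recommendation) pair."""
    cores = os.cpu_count() or 0
    ram_gib = read_mem_total_gib(meminfo_path)
    free_gib = shutil.disk_usage(disk_path).free / (1024 ** 3)
    return {
        "cpu_cores": (cores, cores >= min_cores),
        "ram_gib": (round(ram_gib, 1), ram_gib >= min_ram_gib),
        "free_disk_gib": (round(free_gib, 1), free_gib >= min_free_disk_gib),
    }
```

Calling check_host() and printing each entry gives a quick pass/fail overview. A machine sold as 16 GB usually reports slightly less than 16 GiB in MemTotal, so treat a near miss on memory as acceptable, and point disk_path at the filesystem where Ollama will store its models.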

Let’s Build the AI Log Analysis Tool for Linux

In this demonstration, I’m using a single server that hosts both the log analysis tool and the Ollama inference engine. In production, you can run Ollama on a separate high-performance server and install only the lightweight log analysis tool on your production servers; see the Production Environment Architecture section below.

Install and Configure Ollama

Ollama is the inference engine that will run llama3:8b locally. Install it with the official install script:

curl -fsSL https://ollama.com/install.sh | sh

Verify the installation and the service status.

# Verify the install
ollama --version

# Check the service status
systemctl status ollama

Download the Llama3:8b Model

# Pull the model (this may take 10-15 minutes, depending on your connection)
ollama pull llama3:8b

# Verify model installation
ollama list

# Test it (opens an interactive prompt; type /bye to exit)
ollama run llama3:8b
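The same check can be done over Ollama's HTTP API, which is what the tool built later in this guide relies on. The sketch below uses only the standard library; the helper names are illustrative, and it assumes Ollama is listening on its default address, http://localhost:11434.

```python
import json
import urllib.request


def model_names(tags_payload: dict) -> list:
    """Extract model names from a decoded /api/tags response body."""
    return [m.get("name", "") for m in tags_payload.get("models", [])]


def is_model_installed(tags_payload: dict, wanted: str = "llama3:8b") -> bool:
    """Return True if the wanted model tag appears in the /api/tags payload."""
    return wanted in model_names(tags_payload)


def fetch_tags(base_url: str = "http://localhost:11434", timeout: float = 5.0) -> dict:
    """GET /api/tags from a running Ollama instance and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With Ollama running, is_model_installed(fetch_tags()) should return True once the pull above has finished. If fetch_tags raises a connection error, the service is most likely not running.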

Create the Linux Log Analysis Tool

First, create a Python virtual environment:

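# Install prerequisites (match the package to your Python version, e.g. python3.10-venv on Ubuntu 22.04)
apt install python3.10-venv

# Create the virtual environment
python3 -m venv linux-log-analysis-tool

# Activate it and install the script's only third-party dependency
source linux-log-analysis-tool/bin/activate
pip install requests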

Next, within the virtual environment, create a new file named ai_log_analyzer.py and insert the following code into it.

#!/usr/bin/env python3
"""
SysOpsTechnix AI Log Analysis Tool for Linux System Administration
Powered by Ollama and Llama3:8b for intelligent log analysis
"""

import argparse
import json
import os
import subprocess
import sys
from datetime import datetime
from typing import Dict

import requests


class LogAnalyzer:
    def __init__(self, ollama_url: str = "http://localhost:11434"):
        self.ollama_url = ollama_url
        self.model = "llama3:8b"

    def check_ollama_connection(self) -> bool:
        """Check if Ollama is running and accessible"""
        try:
            response = requests.get(f"{self.ollama_url}/api/tags", timeout=5)
            return response.status_code == 200
        except requests.RequestException:
            return False

    def ensure_model_available(self) -> bool:
        """Ensure the required model is available"""
        try:
            response = requests.get(f"{self.ollama_url}/api/tags", timeout=5)
            if response.status_code == 200:
                models = response.json().get('models', [])
                for model in models:
                    if model.get('name') == self.model:
                        return True

            # Try to pull the model if not available.
            # Status messages go to stderr so they never mix with the report on stdout.
            print(f"Model {self.model} not found. Attempting to pull...", file=sys.stderr)
            result = subprocess.run(['ollama', 'pull', self.model],
                                    capture_output=True, text=True)
            return result.returncode == 0
        except Exception as e:
            print(f"Error checking model availability: {e}", file=sys.stderr)
            return False

    def preprocess_log_content(self, content: str) -> str:
        """Preprocess log content to extract relevant information"""
        lines = content.strip().split('\n')

        # Filter out empty lines and very long lines that might be noise
        filtered_lines = []
        for line in lines:
            line = line.strip()
            if line and len(line) < 1000:  # Skip extremely long lines
                filtered_lines.append(line)

        # If too many lines, take a sample
        if len(filtered_lines) > 100:
            # Take first 50 and last 50 lines
            filtered_lines = filtered_lines[:50] + ["... (truncated) ..."] + filtered_lines[-50:]

        return '\n'.join(filtered_lines)

    def analyze_logs(self, log_content: str) -> Dict:
        """Analyze log content using Ollama"""
        if not self.check_ollama_connection():
            return {"error": "Cannot connect to Ollama. Make sure Ollama is running."}

        if not self.ensure_model_available():
            return {"error": f"Model {self.model} is not available."}

        preprocessed_content = self.preprocess_log_content(log_content)

        prompt = f"""
You are an expert Linux system administrator. Analyze the following log entries and provide a clear, human-readable summary.

Please provide your analysis in the following format:

## SUMMARY
Brief overview of what's happening in the logs

## ISSUES IDENTIFIED
List critical issues, warnings, and errors found

## SEVERITY ASSESSMENT
- Critical: Issues requiring immediate attention
- Warning: Issues that should be monitored
- Info: Normal operational messages

## RECOMMENDATIONS
Specific actions the system administrator should take

## PATTERNS OBSERVED
Any recurring patterns or trends in the logs

Here are the log entries to analyze:

{preprocessed_content}

Please be concise but thorough in your analysis.
"""

        try:
            response = requests.post(
                f"{self.ollama_url}/api/generate",
                json={
                    "model": self.model,
                    "prompt": prompt,
                    "stream": False
                },
                timeout=300
            )

            if response.status_code == 200:
                result = response.json()
                return {
                    "success": True,
                    "analysis": result.get("response", "No response generated"),
                    "timestamp": datetime.now().isoformat()
                }
            else:
                return {"error": f"API request failed with status {response.status_code}"}
        except requests.RequestException as e:
            return {"error": f"Request failed: {str(e)}"}

    def format_output(self, analysis_result: Dict, format_type: str = "text") -> str:
        """Format the analysis output"""
        if "error" in analysis_result:
            return f"❌ Error: {analysis_result['error']}"

        if format_type == "json":
            return json.dumps(analysis_result, indent=2)

        # Text format
        output = []
        output.append("🔍 AI LOG ANALYSIS REPORT")
        output.append("=" * 50)
        output.append(f"📅 Generated: {analysis_result.get('timestamp', 'Unknown')}")
        output.append("")
        output.append(analysis_result.get('analysis', 'No analysis available'))
        output.append("")
        output.append("=" * 50)
        output.append("Generated by SysOpsTechnix AI Log Analyzer using Ollama + Llama3:8b")

        return "\n".join(output)

def main():
    parser = argparse.ArgumentParser(
        description="SysOpsTechnix AI-powered log analysis tool for Linux system administration",
        formatter_class=argparse.RawDescriptionHelpFormatter,
        epilog="""
Examples:
  %(prog)s -f /var/log/syslog
  %(prog)s -f /var/log/apache2/error.log --format json
  tail -n 100 /var/log/auth.log | %(prog)s --stdin
  %(prog)s -f /var/log/nginx/access.log -o analysis_report.txt
"""
    )

    parser.add_argument('-f', '--file', help='Log file to analyze')
    parser.add_argument('--stdin', action='store_true',
                        help='Read log content from stdin')
    parser.add_argument('-o', '--output', help='Output file (default: stdout)')
    parser.add_argument('--format', choices=['text', 'json'], default='text',
                        help='Output format (default: text)')
    parser.add_argument('--lines', type=int, default=0,
                        help='Number of lines to analyze from the end of file (0 = all)')
    parser.add_argument('--url', default='http://localhost:11434',
                        help='Ollama API URL (default: http://localhost:11434)')

    args = parser.parse_args()

    # Validate arguments
    if not args.file and not args.stdin:
        parser.error("Must specify either --file or --stdin")

    if args.file and args.stdin:
        parser.error("Cannot specify both --file and --stdin")

    # Read log content
    try:
        if args.stdin:
            log_content = sys.stdin.read()
        else:
            if not os.path.exists(args.file):
                print(f"❌ Error: File '{args.file}' not found", file=sys.stderr)
                sys.exit(1)

            with open(args.file, 'r', encoding='utf-8', errors='ignore') as f:
                if args.lines > 0:
                    # Read last N lines
                    lines = f.readlines()
                    log_content = ''.join(lines[-args.lines:])
                else:
                    log_content = f.read()
    except Exception as e:
        print(f"❌ Error reading log content: {e}", file=sys.stderr)
        sys.exit(1)

    if not log_content.strip():
        print("❌ Error: No log content to analyze", file=sys.stderr)
        sys.exit(1)

    # Analyze logs
    print("🔄 Analyzing logs with AI...", file=sys.stderr)
    analyzer = LogAnalyzer(args.url)
    result = analyzer.analyze_logs(log_content)

    # Format and output result
    formatted_output = analyzer.format_output(result, args.format)

    if args.output:
        try:
            with open(args.output, 'w', encoding='utf-8') as f:
                f.write(formatted_output)
            print(f"✅ Analysis saved to {args.output}", file=sys.stderr)
        except Exception as e:
            print(f"❌ Error writing output file: {e}", file=sys.stderr)
            sys.exit(1)
    else:
        print(formatted_output)


if __name__ == "__main__":
    main()
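One limitation of the script is worth noting: when the input exceeds 100 lines, preprocess_log_content keeps only the first 50 and last 50, so an error in the middle of a large file never reaches the model. The sketch below shows one possible alternative that keeps lines matching common error keywords and fills the remaining budget with the most recent lines. The function name and keyword list are hypothetical, not part of the original tool.

```python
import re

# Hypothetical keyword list; tune it for your own log sources.
KEYWORDS = re.compile(
    r"\b(?:error|fail(?:ed|ure)?|denied|critical|panic|segfault|timeout)\b",
    re.IGNORECASE,
)


def select_relevant_lines(content: str, max_lines: int = 100) -> str:
    """Keep keyword-matching lines first, then pad with the newest lines."""
    if max_lines <= 0:
        raise ValueError("max_lines must be positive")

    # Same basic cleanup as the original: drop empty and extremely long lines.
    lines = [ln.strip() for ln in content.splitlines()]
    lines = [ln for ln in lines if ln and len(ln) < 1000]
    if len(lines) <= max_lines:
        return "\n".join(lines)

    # Indices of lines that look like problems; keep the most recent ones
    # if there are more matches than the budget allows.
    flagged = [idx for idx, ln in enumerate(lines) if KEYWORDS.search(ln)]
    keep = set(flagged[-max_lines:])

    # Fill whatever budget is left with the newest lines, walking backwards.
    idx = len(lines) - 1
    while len(keep) < max_lines and idx >= 0:
        keep.add(idx)
        idx -= 1

    # Emit the selected lines in their original order.
    return "\n".join(lines[i] for i in sorted(keep))
```

To try it, you could call this function from preprocess_log_content in place of the head-and-tail sampling. The trade-off is that the model sees less of the surrounding context for each flagged line.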

Make ai_log_analyzer.py executable:

chmod +x ai_log_analyzer.py

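Create a symbolic link for easy access to the tool. Writing to /usr/local/bin usually requires root. The script's shebang resolves python3 from PATH, so the requests package must be importable by that interpreter when you run the tool outside the virtual environment.

ln -s "$(pwd)/ai_log_analyzer.py" /usr/local/bin/ai-log-analyzer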

Command Line Options

This tool supports various command-line options for flexibility:

# Basic usage
ai-log-analyzer -f /var/log/syslog

# Analyze last 100 lines
ai-log-analyzer -f /var/log/auth.log --lines 100

# JSON output for automation
ai-log-analyzer -f /var/log/nginx/error.log --format json

# Pipe input from other commands
# (use tail -n, not tail -f: the tool reads stdin until end-of-file, and tail -f never closes the stream)
tail -n 100 /var/log/apache2/access.log | ai-log-analyzer --stdin

# Save analysis to file
ai-log-analyzer -f /var/log/kern.log -o analysis_report.txt

# Show help
ai-log-analyzer --help
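For automation, the JSON output can be consumed from another script. The sketch below assumes the ai-log-analyzer symlink created earlier; the helper names are illustrative. In the script as listed, analysis errors are printed as a plain "❌ Error: ..." line even with --format json and the exit status stays 0, so the parser treats any non-JSON output as a failure.

```python
import json
import subprocess


def parse_report(raw: str) -> dict:
    """Decode the tool's stdout; fall back to an error dict for non-JSON output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {"success": False, "error": raw.strip()}
    if not isinstance(data, dict):
        return {"success": False, "error": raw.strip()}
    return data


def run_analyzer(log_path: str, lines: int = 100,
                 command: str = "ai-log-analyzer") -> dict:
    """Run the CLI with --format json and return the parsed report."""
    proc = subprocess.run(
        [command, "-f", log_path, "--lines", str(lines), "--format", "json"],
        capture_output=True, text=True, check=False,
    )
    return parse_report(proc.stdout)
```

A caller could then branch on report.get("success") and forward report["analysis"] to a ticketing or alerting system of its choice.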

Sample AI Linux Log Analysis Reports

Note: Analysis processing time depends on your Ollama server’s performance. Complex log files may take several minutes to analyze.

Syslog AI Analysis

Linux Auth Log AI Analysis

Web Access Log AI Analysis

Production Environment Architecture

For multi-server production environments, consider deploying a dedicated Ollama server instead of installing Ollama on each production server:

Centralized Ollama Server Benefits

✅ Resource Optimization: A single powerful server handles all AI processing

✅ Reduced Overhead: Production servers remain lightweight

✅ Consistent Performance: Dedicated resources for AI inference

✅ Easier Maintenance: Update models in one location

✅ Cost Efficiency: Single GPU-enabled server vs multiple installations
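With a central server, each production host only needs to reach the Ollama API over the network, for example by passing --url to the tool. The sketch below builds the same /api/generate request the script sends, using only the standard library. The host name ollama.internal.example is a placeholder, and the sketch assumes the server has been configured to listen on a network interface (Ollama binds to localhost by default; the OLLAMA_HOST environment variable changes this).

```python
import json
import urllib.request


def build_generate_request(base_url: str, prompt: str,
                           model: str = "llama3:8b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to a remote Ollama server and return the generated text."""
    request = build_generate_request(base_url, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body.get("response", "")
```

Keep in mind that ensure_model_available in the script falls back to running ollama pull locally, which cannot work on hosts without the Ollama CLI, so the model should be pulled on the central server beforehand. The Ollama API has no built-in authentication, so restrict access to it with firewall rules or a private network.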

Conclusion and Next Steps

You’ve successfully built a powerful AI-powered log analysis tool that can transform your system administration workflow. This tool provides:

- Intelligent log interpretation using state-of-the-art AI

- Human-readable summaries of complex technical logs

- Automated issue identification and severity assessment

- Flexible integration with existing monitoring systems

Next Steps

- Customize for Your Environment: Improve analysis accuracy by tailoring the prompt to specific log types and use cases.

- Cron Job Integration: Automate log analysis with scheduled cron jobs.

- Integration with Logrotate: Configure logrotate to trigger analysis in a postrotate script.
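As a starting point for prompt customization, the sketch below adds a short, log-type-specific hint to the base instruction used in the script. The hint texts, the log type names, and the build_prompt helper are all hypothetical and should be adapted to your environment.

```python
# Hypothetical per-log-type hints; the wording is illustrative only.
PROMPT_HINTS = {
    "auth": "Focus on failed logins, sudo usage, and repeated attempts from the same source IP.",
    "web": "Focus on HTTP 4xx/5xx status codes, unusual request paths, and traffic spikes.",
    "kernel": "Focus on hardware errors, OOM killer events, and filesystem warnings.",
}

# Opening instruction taken from the prompt in ai_log_analyzer.py.
BASE_INSTRUCTION = (
    "You are an expert Linux system administrator. "
    "Analyze the following log entries and provide a clear, human-readable summary."
)


def build_prompt(log_type: str, log_content: str) -> str:
    """Combine the base instruction, an optional hint, and the log entries."""
    parts = [BASE_INSTRUCTION]
    hint = PROMPT_HINTS.get(log_type, "")
    if hint:
        parts.append(hint)
    parts.append("Here are the log entries to analyze:")
    parts.append(log_content)
    return "\n\n".join(parts)
```

One way to wire this in would be to add a --type argument to the CLI and pass its value through to analyze_logs. Unknown types simply fall back to the generic prompt.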